Welcome to the Ethics & Ink AI Newsletter #24

Your Data Is Being STOLEN. Here’s How You Put A STOP To It.

Stop trusting “privacy-friendly” cloud services. Start demanding real control.

Last week, I shared a phenomenal new architecture and framework developed by the researchers at Hangzhou Dianzi University. This report (which you can read here) is something every data protection officer should study, print out and slap on their vendor’s desk: hard proof that you can actually control your data after you share it. Not the fake control most platforms promise you. Real control.

Your Nonprofit’s PRIVACY Policy? Big Tech’s Backdoor to YOUR Data.

Welcome to the Ethics & Ink AI Newsletter #23

The Problem You’re NOT Being Told About

Every “secure” cloud service is lying to you. The moment your data hits their servers, you lose control. Sure, they’ll encrypt it. Sure, they’ll promise GDPR compliance. But when you want to revoke access? When you need to delete specific pieces? When you discover they’re sharing with third parties you never approved?

You’re stuck. They own your data now. The contract you signed made sure of that.

What This New System Actually Does (In Plain English)

The Task-Driven Data Capsule System (TD-DCSS) treats your data like it belongs to you—not them:

Your data stays wrapped in YOUR security container - think of it as a safe that only opens for specific, time-limited tasks you approve.

You issue access tokens, not blanket permissions - instead of giving someone the keys to your house, you give them a specific task like “deliver this package to apartment 3B.”

You can revoke access instantly - not in 30 days, not after their “data review process,” but immediately when you say stop.
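The three ideas above (task-scoped tokens, time limits, and instant revocation) can be illustrated with a toy sketch. It is not the TD-DCSS design from the Hangzhou Dianzi University research, and every name in it (`DataCapsule`, `TaskToken`, `grant`, `access`, `revoke`) is hypothetical. A real system would also need encryption and enforcement on the recipient's side, which this in-memory example does not attempt.

```python
import secrets
import time
from dataclasses import dataclass, field


@dataclass(frozen=True)
class TaskToken:
    task: str            # the single task this token permits
    fields: frozenset    # the only data fields that task may read
    expires_at: float    # Unix time after which the token stops working


@dataclass
class DataCapsule:
    """Hypothetical owner-controlled container: data leaves only through a live task token."""
    data: dict
    _tokens: dict = field(default_factory=dict, init=False, repr=False)

    def grant(self, task, fields, ttl_seconds, now=None):
        """Issue a token for one task, limited to `fields`, valid for `ttl_seconds`."""
        now = time.time() if now is None else now
        token_id = secrets.token_urlsafe(16)
        self._tokens[token_id] = TaskToken(task, frozenset(fields), now + ttl_seconds)
        return token_id

    def revoke(self, token_id):
        """Revocation is immediate: once forgotten, the token can never be redeemed."""
        self._tokens.pop(token_id, None)

    def access(self, token_id, task, now=None):
        """Return only the granted fields; raise if the token is unknown, revoked, expired, or reused for another task."""
        now = time.time() if now is None else now
        token = self._tokens.get(token_id)
        if token is None or token.task != task or now >= token.expires_at:
            raise PermissionError("no valid token for this task")
        return {k: self.data[k] for k in token.fields if k in self.data}


capsule = DataCapsule({"name": "A. Client", "address": "Apt 3B", "phone": "555-0100"})
token = capsule.grant("deliver_package", {"address"}, ttl_seconds=3600, now=0)
print(capsule.access(token, "deliver_package", now=60))  # {'address': 'Apt 3B'}
capsule.revoke(token)  # any later access(token, ...) now raises PermissionError
```

The design choice mirrors the "deliver this package to apartment 3B" analogy: the recipient never holds the whole record, only the one field a single task needs, for a limited time.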

Why This Matters Right Now

GDPR isn’t a suggestion anymore. The “right to be forgotten” isn’t a nice-to-have. Enforcement is happening. Organizations are getting fined. And your current cloud provider’s “privacy policy” won’t save you.

The Reality Check: Building This Isn’t Cheap

Building a system like this from scratch is pricey. Here’s what you’re looking at:

Initial development: $350,000-$500,000

Infrastructure setup: $75,000-$150,000

Security auditing: $50,000-$100,000 (required quarterly)

Compliance certification: $30,000-$60,000

Ongoing maintenance: $15,000-$25,000 monthly

Timeline:

Planning and architecture: 2-3 months

Core development: 6-8 months

Security testing and hardening: 2-3 months

Compliance verification: 1-2 months

Total: 11-16 months before deployment

If this isn’t a financial commitment you’re ready to make for your organization, I hear you. Thankfully, here are some very, very simple changes you can make to transform the standards of protection for your mission:

Implement data classification protocols that clearly mark sensitive information

Create an inventory of ALL third-party data processors and review it quarterly

Establish a mandatory 48-hour response window for access revocation requests

Require written confirmation of data deletion from all vendors

Set up automatic data retention limits with your current providers

Conduct twice-yearly data privacy training for all staff
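Two of these items, the 48-hour revocation window and automatic retention limits, can be tracked without any new infrastructure. A minimal sketch, assuming you keep a simple log of revocation requests and record creation dates; the field names, vendor names, and the 365-day limit are hypothetical placeholders for your own policy.

```python
from datetime import datetime, timedelta

REVOCATION_WINDOW = timedelta(hours=48)  # the 48-hour window from the checklist above
RETENTION_LIMIT = timedelta(days=365)    # hypothetical retention policy; set your own


def overdue_revocations(revocation_log, now):
    """Vendors that have not confirmed a revocation within the 48-hour window."""
    return [
        r["vendor"]
        for r in revocation_log
        if r["confirmed_at"] is None and now - r["requested_at"] > REVOCATION_WINDOW
    ]


def past_retention(records, now):
    """IDs of records held longer than the retention limit, i.e. due for deletion."""
    return [r["id"] for r in records if now - r["created_at"] > RETENTION_LIMIT]


now = datetime(2025, 8, 1, 12, 0)
revocation_log = [
    {"vendor": "CRM vendor", "requested_at": now - timedelta(hours=50), "confirmed_at": None},
    {"vendor": "Email vendor", "requested_at": now - timedelta(hours=10), "confirmed_at": None},
]
records = [
    {"id": "intake-001", "created_at": now - timedelta(days=400)},
    {"id": "intake-002", "created_at": now - timedelta(days=30)},
]
print(overdue_revocations(revocation_log, now))  # ['CRM vendor']
print(past_retention(records, now))              # ['intake-001']
```

Running a check like this weekly gives you a written list to send vendors when you request deletion confirmations.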

Questions to Ask Your Current Vendors Today

“Can I revoke access to specific data points immediately, not just disable my account?”

“Can you show me exactly what data you’re sharing with third parties, in real time?”

“If I demand deletion under GDPR, how do you ensure my data is actually gone from all your systems and partners?”

Ask these questions and watch them squirm. Most can’t answer them, because their systems weren’t built for your protection—they were built for their profit.

What You CAN Do Now

Before your next vendor contract renewal:

Demand immediate revocation capabilities in writing

Require real-time data sharing transparency

Insist on granular access controls, not all-or-nothing permissions

Bottom Line

Your current “privacy-preserving” systems probably aren’t. The technology exists to actually protect your people’s data. The only question is whether you’ll demand it or keep accepting vendor theater.

Stop trusting promises. Start demanding proof.

Your compliance deadline isn’t moving. Your vendors’ excuses won’t protect you. But the low-cost technologies revealed in today’s newsletter just might. Keep reading to find out what your Big Tech brother and sister competitors already know!

When Your Tech Stack Becomes a Weapon Against Those You Serve

Your phone buzzes at 2:47 AM.

“We have a MAJOR problem. That trafficking survivor we recently housed? One of her abusers found her. She called and said he’s been sitting outside her house for the last three hours.”

“What? How? We followed every single protocol—”

“Apparently... the admin has been using Dropbox since the file server went down, and someone got into the cloud storage account. When her photo and file were uploaded to that folder, the intruder stole them and sold the information to anyone willing to pay for it. It’s doing numbers on a dumpy ExOnlyFans site as we speak. They’ve got a map of our city, some ‘hot sex spots’ and a special top 10 playlist of her best blowjobs. The comments absolutely TURN my stomach.”

“Jesus CHRIST, that’s insane. These people are sick.”

“I know. Just absolutely disgusting. And get this, they’ve even posted her new number with a caption that says, ‘Give her a call and bring this black beauty back to the industry.’ I am absolutely SICK over this.”

“ME TOO. Oh my gosh. I have to GO.”

You hang up, stomach churning. Your organization’s “free” tech stack—the tools you use to “help” vulnerable people—just enabled someone else to hunt down, humiliate and harass a recovering cyber-trafficking victim.

Your mind spins and you begin to rethink everything.

How could this have POSSIBLY happened?

The Google Workspace “gift to nonprofits.”

The Salesforce database.

The Microsoft systems processing intake forms.

All monetizing vulnerability data that stalkers and abusers are known to pay premium prices to access.

Your board meeting is in six hours. You need to explain how your organization’s technology choices nearly got someone killed.

This isn’t a hypothetical. It’s happening right now.

Olivia’s Story: When Our Tools Betray Our Mission

She sits across from you, near tears, hands shaking. Twenty-eight years old, just turning her life over to Christ and trying to build a new life, after finally leaving the adult entertainment industry. She’s moved four times in six months, with ZERO dollars left in the bank, after being systematically hunted by someone with access to every platform she’s ever used.

“I thought deleting my accounts would be enough,” she whispers. “But I think he found my new address through Venmo. And then, my work schedule through some Instagram location tags I didn’t even know I was sharing. And now, he knows that I’m HERE because he literally followed me. I don’t know how much longer I can take this. I’m about to LOSE MY JOB!”

She bursts into tears. You want to help. You have housing available. But then you’re hit by a devastating realization: your own systems—built on platforms that track and monetize every interaction—make you fully complicit in the very surveillance apparatus being weaponized against her at this moment.

Every email about her case. Every shared document with her information. You’re inadvertently feeding data to the ecosystem that enables her stalker.

The Money Truth: What “Free” Actually Costs Your Organization

You’ve been told these platforms are gifts. Here’s what verified research reveals:

Your 25-Person Organization - Annual Reality

Google Workspace: “Free for nonprofits” → $5,000-15,000 in additional hidden costs

Microsoft 365: “Donated software” → $6,000-12,000 reality

Salesforce: “$60/user generosity” → $18,000 total with $7,000-30,000 implementation

Plus the other invisible costs:

IT crisis calls: $6,000/year

Staff training on confusing platforms: $4,000/year

Breach risk: $98,000 average cost for nonprofits

Legal compliance scrambling: $8,000/year

True cost of your current complicity: $47,000-63,000 annually
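The newsletter doesn’t say which items make up the $47,000-63,000 total. One reading that reproduces it exactly is to add the recurring items and leave out the one-time Salesforce implementation and the average breach cost. The sketch below checks that arithmetic; the grouping is an assumption, not something the source states.

```python
# Annual (low, high) figures in USD, copied from the list above.
recurring_costs = {
    "Google Workspace hidden costs": (5_000, 15_000),
    "Microsoft 365": (6_000, 12_000),
    "Salesforce licenses": (18_000, 18_000),
    "IT crisis calls": (6_000, 6_000),
    "Staff training": (4_000, 4_000),
    "Legal compliance scrambling": (8_000, 8_000),
}
# Deliberately left out (assumption): the one-time Salesforce implementation
# ($7,000-30,000) and the $98,000 average breach cost, which is a risk, not a yearly bill.
low = sum(lo for lo, _ in recurring_costs.values())
high = sum(hi for _, hi in recurring_costs.values())
print(f"${low:,}-{high:,} per year")  # $47,000-63,000 per year
```

If your organization runs only one of Google Workspace or Microsoft 365, drop the other line and the total shrinks accordingly.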

The math is clear: “Free” is the most expensive word in nonprofit technology. When tech giants offer charity, they’re actually selling your organization’s privacy, security, and autonomy at premium prices you never agreed to pay. Stop subsidizing Silicon Valley’s data empire with your mission’s resources. The question isn’t whether you can afford privacy-first protection—it’s whether you can afford to continue without it.

What Privacy-First Protection Actually Costs:

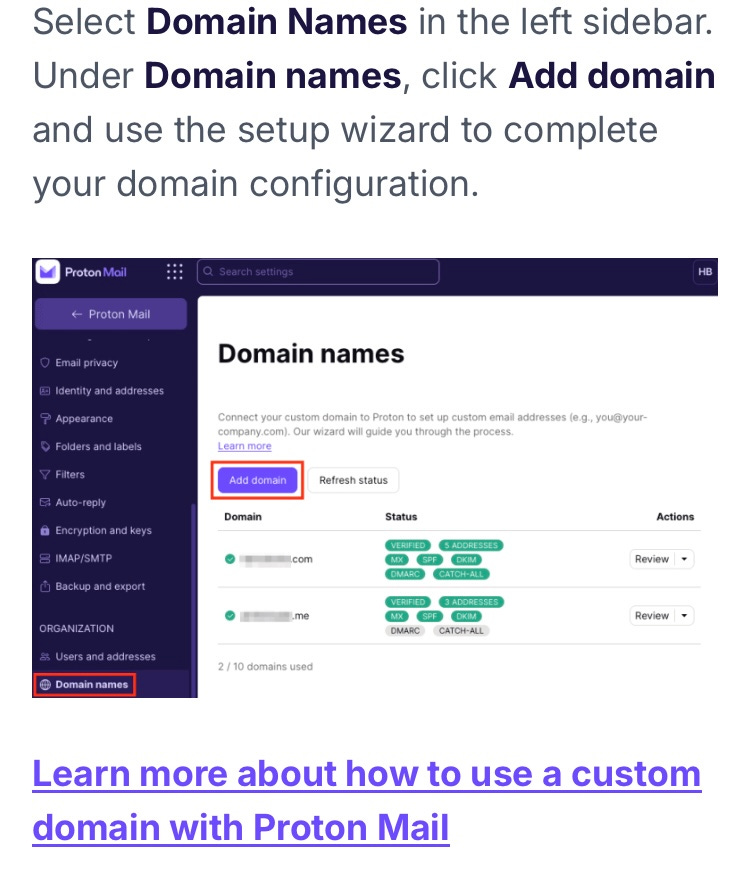

ProtonMail Business: $2,500-4,800 (ISO certified end-to-end encryption for email)

Element Secure Messaging: $900 (End-to-end encrypted collaboration)

Nextcloud Self-Hosted: FREE-$500 (Complete data sovereignty)

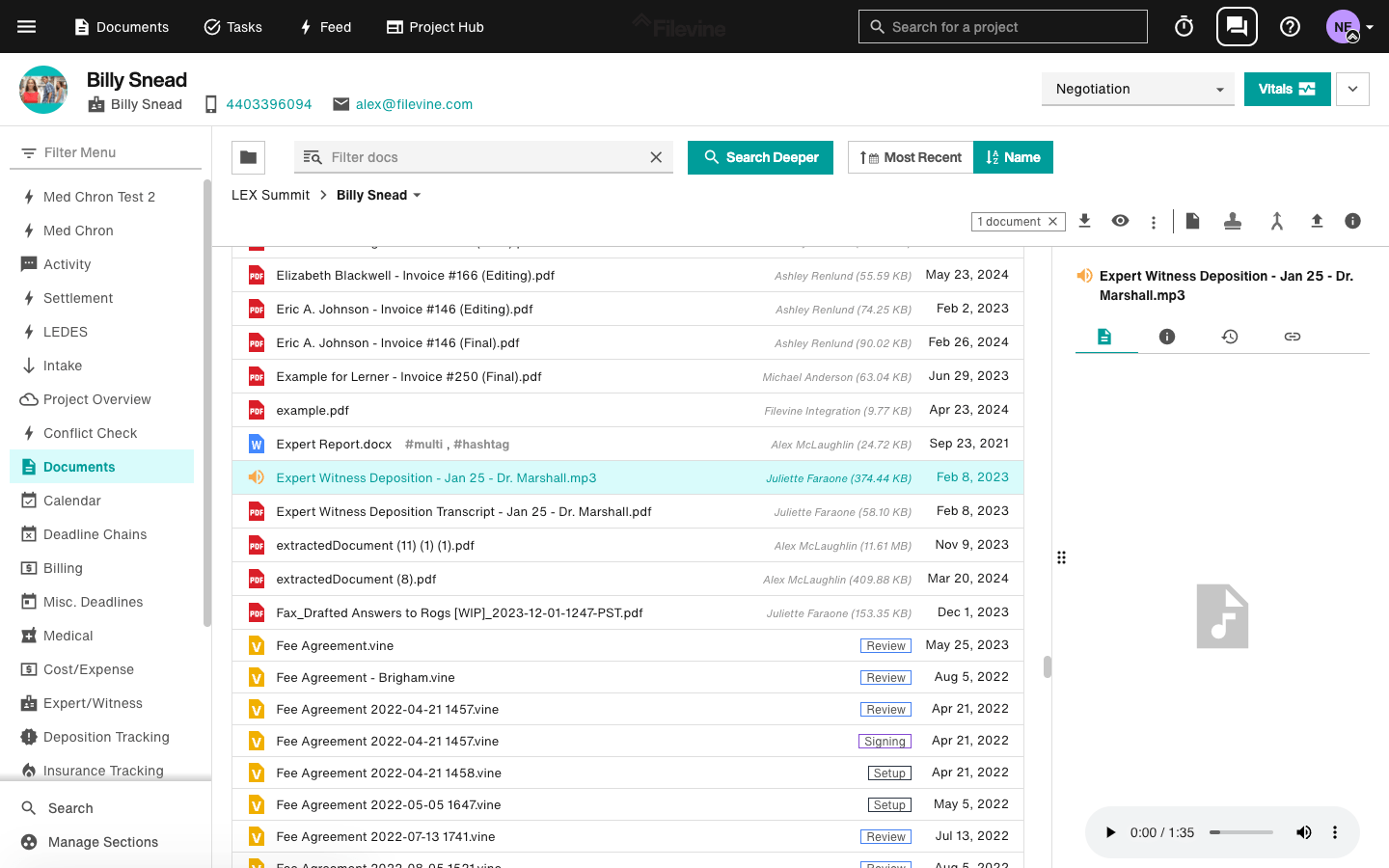

Filevine - Privacy-First AI-Enhanced Case Management System: $3,000-8,000 (though initially developed for legal practices, this solution adapts seamlessly to nonprofit use, including medical and healthcare support organizations, while maintaining full HIPAA+ compliance)

Your Protection Investment: $6,400-14,300 annually

Investing in privacy isn’t an expense—it keeps your mission insured. At $6,400-14,300 annually, true data protection costs a fraction of what “free” platforms silently extract from your organization. This isn’t just about saving $33,000-49,000 each year; it’s about reclaiming control of your mission’s most valuable asset: trust. When donors, clients, and communities know you’ve chosen protection over exploitation, they don’t just support your work—they become advocates for your vision. Privacy-first technology isn’t a luxury for nonprofits—it’s the foundation that ensures your mission remains yours, and yours alone.

5-Year Reality Check:

Path you’re on now: $235,000-315,000 + damages

Privacy-first path: $32,000-71,500 + actual safety

Your Freedom: $203,000-243,500 to spend on programs instead of maintaining various kinds of low-tech surveillance apparatuses
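The five-year figures follow from multiplying each annual range by five, assuming costs stay flat (no inflation, no price changes, no one-time migration spending) and excluding damages. A quick check:

```python
YEARS = 5
current = (47_000, 63_000)        # annual cost of the current "free" stack (low, high)
privacy_first = (6_400, 14_300)   # annual privacy-first investment (low, high)

current_5y = tuple(YEARS * c for c in current)
privacy_5y = tuple(YEARS * p for p in privacy_first)
# Low is paired with low and high with high, as in the figures above.
freedom = tuple(c - p for c, p in zip(current_5y, privacy_5y))

print(current_5y)  # (235000, 315000)
print(privacy_5y)  # (32000, 71500)
print(freedom)     # (203000, 243500)
```

A more conservative reading pairs the current low with the privacy-first high: $235,000 minus $71,500 leaves $163,500 over five years, still a substantial amount to redirect to programs.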

The Pattern You Can’t Unsee: The Surveillance-to-Violence Pipeline

Most nonprofits are unknowingly running surveillance operations disguised as helping programs.

Every day you use these “free” platforms, you participate in:

Data Collection: Platforms harvest your clients’ information for their own use.

Profile Building: Algorithms are then created to map your community’s vulnerabilities.

Data Marketplace: Information about your community inevitably flows to brokers and, from there, to corporate marketing firms, political interest groups, political operatives and, yep, bad actors.

Exploitation Access: “Talent” poachers, stalkers, and abusers gain intelligence on who can be most easily used.

Retraumatization: Your clients can’t escape the digital footprints you helped create, and find themselves inadvertently locked into yet another endless, highly technological system designed purely to manipulate, switch and rebait.

Now, don’t get me wrong. You aren’t the villain here. You’re the hero who’s been given broken tools and told they’re gifts.

The Legal Storm Coming Your Way

Twenty states now have comprehensive privacy laws, with seven explicitly applying to nonprofits.

Your Regulatory Timeline:

2025: Oregon privacy law applies to nonprofits (July 1)

2025: Universal opt-out mechanisms required in Connecticut, Texas, Montana, Nebraska

2026-2027: Multiple states implementing comprehensive requirements (despite the moratorium, and frankly, good)

Violations of Colorado’s privacy law now result in immediate penalties of up to $2,000 per violation per consumer, with maximum fines of $500,000.
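Because the penalty scales per consumer, a small organization can reach the cap quickly. A sketch using only the two figures stated above; it does not model how Colorado actually counts violations or any other part of the statute, and `penalty_exposure` is a hypothetical helper.

```python
PER_VIOLATION_PER_CONSUMER = 2_000  # USD, as stated above
MAXIMUM_FINE = 500_000              # USD cap, as stated above


def penalty_exposure(violations, consumers):
    """Upper-bound exposure using only the newsletter's figures: per violation, per consumer, capped."""
    return min(PER_VIOLATION_PER_CONSUMER * violations * consumers, MAXIMUM_FINE)


for consumers in (10, 100, 250, 1_000):
    print(consumers, penalty_exposure(1, consumers))
# 10 20000
# 100 200000
# 250 500000
# 1000 500000
```

On these figures, a single violation touching 250 people already reaches the $500,000 maximum.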

Strategic Planning vs. Panic:

Legal preparation: $3,000 vs. $15,000

Implementation: $12,000 vs. $35,000

5-year compliance: $8,000 vs. $60,000

Penalty risk: Minimal vs. up to $500,000

The Big Tech Reality Check

Microsoft 365: Free Office 365 E1 and Business Premium grants discontinued July 2025. Now requiring annual prepayment with 5-20% surcharges for monthly options. Volunteers explicitly cannot use nonprofit licenses.

Google Workspace: Free for nonprofits, but privacy policies identical to commercial accounts with no enhanced protections for sensitive information.

Salesforce: Implementation costs ($7,000-30,000) typically exceed the value of free licenses.

The Data Breach Reality Check

For cash-strapped nonprofits already stretched thin, cyberattacks deliver a devastating financial blow—with insurance claims averaging $98,000 per incident and ransomware attacks inflicting even steeper losses at $172,000, potentially wiping out months of donor contributions and forcing organizations to divert critical mission funding toward recovery efforts.

Real examples:

International Committee of the Red Cross Breach: The 2022 International Committee of the Red Cross (ICRC) breach compromised sensitive data of 515,000+ vulnerable individuals, resulting in approximately $5.2 million in incident response costs, including $2.8 million for forensic investigation and remediation. The ICRC faced potential regulatory penalties up to €20 million under GDPR guidelines, though as an international organization, they received adjusted sanctions. Additional costs included $1.7 million for victim notification and support services, plus an estimated $3.4 million in reputational damage and lost donor contributions. The organization implemented a $6.5 million cybersecurity enhancement program following the incident.

The Arc of Erie County HIPAA Penalties: The Arc of Erie County paid $200,000 in HIPAA penalties for a breach affecting 3,751 clients, equating to $53.32 per compromised record. The settlement included a $150,000 direct fine to the Office for Civil Rights (OCR) and $50,000 allocated to a mandatory three-year corrective action plan with quarterly compliance reporting requirements costing approximately $75,000 annually. The organization also incurred $125,000 in breach notification expenses, $90,000 for credit monitoring services, and faced a 42% increase in their cyber insurance premiums for the following three years, representing an additional $210,000 in indirect penalty costs.

Cyber Insurance Costs and Requirements: Cyber insurance costs average $1,740 annually for small businesses (1-10 employees) with a $5,000 deductible per claim and $1 million coverage limit. Policy requirements include multi-factor authentication ($2,500-$7,000 implementation cost), comprehensive patch management systems ($3,600-$9,200 annually), and encrypted backup solutions ($4,800-$12,000 annually). Non-compliance with these security requirements results in claim denials, while security incidents trigger premium increases of 30-150% upon renewal. Sublimits for regulatory fines often cap at $250,000, leaving organizations exposed to potentially significant out-of-pocket expenses for larger breaches.
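To see why the sublimit matters, here is a rough out-of-pocket estimate for a single claim using the policy figures above. It assumes the deductible applies once, regulatory fines are covered only up to the $250,000 sublimit, and everything covered counts against the $1 million limit. Real policies vary, so treat `out_of_pocket` (a hypothetical helper) as an illustration only.

```python
DEDUCTIBLE = 5_000
COVERAGE_LIMIT = 1_000_000
REGULATORY_SUBLIMIT = 250_000


def out_of_pocket(response_costs, regulatory_fines):
    """Estimate what the organization pays itself for one claim (hypothetical model)."""
    covered_fines = min(regulatory_fines, REGULATORY_SUBLIMIT)
    uncovered_fines = regulatory_fines - covered_fines  # fines above the sublimit
    covered_claim = response_costs + covered_fines
    insurer_pays = min(max(covered_claim - DEDUCTIBLE, 0), COVERAGE_LIMIT)
    return covered_claim - insurer_pays + uncovered_fines


print(out_of_pocket(98_000, 0))        # 5000
print(out_of_pocket(98_000, 400_000))  # 155000
```

In the second case, a $400,000 fine leaves $150,000 above the sublimit, which the organization pays on top of the deductible.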

The numbers don’t lie: data breaches aren’t just security incidents—they’re existential threats to your nonprofit’s survival. When a cyberattack strikes, it doesn’t just compromise data; it compromises your entire mission, diverting funds meant for communities in need toward legal fees, investigations, and reputation repair. With average costs approaching six figures per incident and regulatory penalties that can reach into the millions, the question isn’t whether you can afford proper cybersecurity—it’s whether you can afford to operate without it. Your donors trust you with their contributions; your clients trust you with their data. Break that trust once, and the true cost becomes incalculable. Protection isn’t an IT expense; it’s the invisible foundation that keeps your mission possible.

Your 12-Month Transformation Path

In today’s digital landscape, your organization isn’t just vulnerable—it’s marked for cyber-sabotage. Hackers have likely already probed your defenses, looking for any weak point that allows them to silently drain your client data for months before you ever detect the breach. When that devastating headline bears your organization’s name, clients won’t just leave—they’ll flee, taking referrals and reputations with them as regulatory fines eviscerate what remains of your budget. Yet lurking within this nightmare scenario is an incredible opportunity. This 12-month transformation journey doesn’t just shield your mission from harm—it positions you as a beacon of integrity in a sector where privacy has become the ultimate currency. While competitors scramble to patch vulnerabilities after breaches occur, you’ll be methodically building a fortress of client confidence that generates tangible returns. The choice is stark: remain exposed to escalating threats or emerge as a leader whose commitment to protection becomes your most powerful competitive advantage.

Months 1-3: Foundation ($4,000-6,000)

Replace your communication systems with encrypted alternatives

Train your staff on privacy-first practices

Begin client data audit and protection protocol

Months 4-6: Core Migration ($3,000-8,000)

Implement a privacy-first case management system

Create data classification protocols for each case manager to use

Establish the legal compliance framework you’ll follow in case of a crisis

Months 7-9: Integration ($2,000-3,000)

Optimize workflows for maximum protection

Complete security audit and penetration testing

Refine governance processes

Months 10-12: Leadership ($500-1,000)

Document best practices for the sector

Begin thought leadership based on transformation results

Establish success metrics

Total: $9,500-18,000

Annual Savings: $20,000-45,000
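For cash-flow planning it can help to see the running total by phase. A minimal sketch using the phase budgets above; it ends at the stated $9,500-18,000 total.

```python
from itertools import accumulate

# (phase, low, high) budgets in USD, copied from the plan above.
phases = [
    ("Months 1-3: Foundation", 4_000, 6_000),
    ("Months 4-6: Core Migration", 3_000, 8_000),
    ("Months 7-9: Integration", 2_000, 3_000),
    ("Months 10-12: Leadership", 500, 1_000),
]
cum_low = list(accumulate(lo for _, lo, _ in phases))
cum_high = list(accumulate(hi for _, _, hi in phases))

for (name, _, _), lo, hi in zip(phases, cum_low, cum_high):
    print(f"{name}: ${lo:,}-{hi:,} spent so far")
# Months 1-3: Foundation: $4,000-6,000 spent so far
# Months 4-6: Core Migration: $7,000-14,000 spent so far
# Months 7-9: Integration: $9,000-17,000 spent so far
# Months 10-12: Leadership: $9,500-18,000 spent so far
```

Most of the spending lands in the first six months, so that is when board authorization matters most.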

Your Sacred Call to Action

Olivia found safe housing only because she had the courage to keep asking for help despite the digital damages and dangers we’d unknowingly created.

But in reality, she shouldn’t have had to be that brave.

And the next survivor shouldn’t have to choose between getting help and staying safe. The next trafficking victim shouldn’t discover your “confidential” intake form feeds data to platforms that enabled and encouraged their harm and exploitation.

Your mission demands this decision. Your values support it. Your budget overwhelmingly justifies it.

Every day you wait, your systems enable the surveillance that harms vulnerable people. Every month you delay is money paid to Big Tech instead of investing in programs that actually serve your clients’ needs.

The compassionate choice isn’t avoiding this difficult transition. The compassionate choice is doing whatever it takes to actually protect the people that trust you.

Your Next 72 Hours

Every day, someone like Olivia walks through your doors, clutching their last shred of hope between trembling fingers. They’ve been tracked, monitored, and exploited—not just by people who venomously wish them harm, but unwittingly by the very systems designed to protect them. Behind each case number in your database is a person desperate for safety, dignity, and a chance to rebuild without digital shadows following their every move. The technological decisions you make in the next three days won’t just change your organization’s security posture—they’ll determine whether your next client finds freedom or remains trapped in a cycle of vulnerability that no amount of well-intentioned support can overcome.

Hours 1-24: Recognition

Share this newsletter and cost analysis with your leadership

Calculate your actual current costs

Schedule a strategic session with your decision makers

Hours 25-48: Exploration

Request demos from privacy-first providers

Research case management solutions for vulnerable populations

Review current vendor contracts for data exploitation clauses

Hours 49-72: Decision

Present the financial analysis

Get authorization for the implementation investment

Schedule Week 1 of your transformation

The people you serve can’t wait for you to feel ready. They need you to be brave enough to protect them properly.

Olivia now lives in stable housing. She found work with an organization that doesn’t track her every move. Her stalker lost the trail when she learned to use privacy-first tools.

She’s not just surviving anymore. She’s thriving and genuinely free.

Your next client could be too.

Thank you for reading!

This analysis is based on verified organizational data, independently confirmed pricing information, and documented privacy legislation research as of August 2025.

When “We Know What’s Best for You” Just Isn’t Good Enough Anymore

The Chapter That Makes Sneaky AI Vendors SWEAT!

Remember when your doctor had to explain a procedure before you agreed to it? Turns out that wasn’t just medical courtesy—it was preparing you for the most important fight of the digital age. Because right now, algorithms are making life-changing decisions about you, and most of the time you don’t even know you agreed to let them.

This chapter isn’t about demanding that every AI system ask your permission before it breathes. It’s about establishing your fundamental right to know when artificial intelligence is being used to evaluate your mortgage application, scan your resume, or categorize you as a “high-risk” insurance customer—and your right to say no.

Why This Law Makes AI Companies Squirm The Most (And Why You Should Care)

While tech executives love hiding behind terms like “user agreements” and “terms of service,” this chapter cuts through the corporate smokescreen with surgical precision. The 2nd Law of Consent establishes a simple principle that somehow terrifies billion-dollar companies: If an AI system affects your life, you deserve to know it’s happening and have a real choice about it.

This isn’t about slowing down innovation—it’s about demanding that the people building these systems actually get your permission before using them to judge, categorize, and make decisions about your life.

The Voice That Makes Complex Ideas Click

Forget dense academic papers that require a computer science degree to decode. This chapter talks to you like that brilliant friend who can explain quantum physics using pizza analogies—smart, accessible, and just irreverent enough to keep you awake.

Sample line that captures the essence:

“When your doctor recommends surgery, they don’t just schedule you—they explain what’s happening and get your signature. So why do we accept ‘We’re using AI to optimize your experience’ without knowing what that actually means?”

Why This Chapter Hits Different

We’re living in an era where “artificial intelligence” has become the ultimate permission-skipper. Companies deploy AI to analyze your behavior, predict your choices, and influence your decisions, all while pretending you somehow agreed to this in some buried clause of a terms-of-service document you never read. This chapter arms you with the knowledge to demand real informed consent for algorithmic decision-making.

You’re ready for content that’s intellectually honest without being academically pretentious, critical without being paranoid, and empowering without requiring you to become a data scientist.

The Problem This Chapter Actually Solves

Current discussions about AI consent fall into predictable traps:

The Tech Apologists: “Users already agreed when they clicked ‘Accept’—that’s consent!”

The “Freedom” Fighters: “Requiring explicit consent will kill innovation and user experience!”

The Legal Theorists: “Here’s a 200-page framework for algorithmic consent mechanisms!”

What’s missing? A chapter that treats informed consent like any other consumer protection—something you deserve as a basic right, not a legal technicality. This chapter delivers that perspective with the diplomacy of a sledgehammer through corporate privacy policies.

The Audience That’s Been Waiting for This

This chapter speaks directly to the enormous group of people who’ve been told to just trust that companies have their best interests at heart:

Job seekers whose applications get fed into automated screening systems they never knew existed.

Patients whose treatment gets influenced by predictive algorithms they never consented to.

Small business owners whose loan applications get processed by AI risk assessment tools they were never told about.

Anyone who’s ever discovered that AI was making decisions about their life without their knowledge or permission.

Think: professionals who use technology daily but refuse to be experimented on without their knowledge. People who understand that “It’s in the terms of service” is usually code for “We’re hoping you won’t notice.”

The Structure That Delivers Real Understanding

This chapter follows the battle-tested formula that makes complex topics addictive:

Opening Salvo: A jaw-dropping real-world example of AI being deployed without people’s knowledge or consent (spoiler: it involves someone just like you)

The Uncomfortable Reality: How companies currently slip AI into your daily life without asking

The 2nd Law Decoded: What meaningful informed consent for AI actually looks like in plain English

Your Personal Action Plan: Concrete steps to identify when AI is being used on you and how to demand real choice

It’s structured so you can absorb it in one sitting or reference specific sections when you’re trying to figure out if that company actually has your permission to use AI on your data.

Why This Chapter Changes Everything

Here’s what makes this different from every other discussion of AI privacy: it gives you actual tools to reclaim control. Not theoretical frameworks or legal wish lists—practical strategies you can use tomorrow when companies try to slip AI into their services without your knowledge.

This chapter transforms you from an unwitting test subject for algorithmic experiments into an informed participant who gets to choose when and how AI affects your life.

The Marketing Controversy That Writes Itself

This chapter will generate the kind of heated debates that drive book sales and speaking engagements. Every section provides ammunition for op-eds, podcast appearances, and very uncomfortable corporate board meetings.

Headlines that’ll grab attention:

“Why AI Companies Are Afraid to Ask Your Permission”

“The Consent Crisis That Tech Giants Hope You’ll Ignore”

“Your Right to Say No to Algorithmic Decision-Making”

The Bottom Line That Matters

Chapter Two delivers exactly what you need: a clear understanding of why informed consent for AI matters, practical knowledge about how to recognize when it’s missing, and the confidence to push back when someone tries to deploy AI on your life without your permission.

Most importantly, it gives you the language to discuss AI consent without getting lost in legal jargon or corporate deflection.

The Promise: After reading this chapter, you’ll never again let companies use “We’re optimizing your experience with AI” as an excuse to skip asking for your actual consent.

Ready to Take Back Control?

This chapter is your guide to AI consent—delivered with enough wit and wisdom to make the medicine go down smooth, and enough substance to change how you interact with AI-powered services forever.

Because in a world where machines make increasingly important decisions about human lives, demanding informed consent isn’t just reasonable—it’s revolutionary!

Get Your Copy Today!

Available now on:

Kindle 📲

—and in

Don’t let algorithms make decisions about your life without understanding what’s really happening. Get the battle plan you need to navigate the AI age with your diligence and dignity intact!