😨 IDSS - A Recipe for AI Agent Catastrophe, or the NEW Secret Sauce?

Understanding IDSS, Correctness Learning & Deductive Verification Guided Learning for Human-AI Collaboration

“[People] should be governed and led, not so as to become slaves, but so that they may freely do whatsoever things are best.”

— Baruch Spinoza, Ethics —

In the shadows of political tyranny and religious dogma, Baruch Spinoza stood as a beacon of reason in the 17th century, daring to dream of a world guided by wisdom, freedom and the sincere desire for the greatest good. In his time, he spoke against the forces that sought to bind the human spirit, lighting a path to enlightenment and fully just governance.

Today, we find ourselves at a similar juncture in time, as intelligent decision-support systems (IDSS) begin to weave into the very fabric of our lives. From how we heal to how we power our world, these systems hold the potential to shape our destiny. Yet, Spinoza's call for governance that uplifts rather than enslaves rings with renewed urgency, urging us to steer these innovations with a greater moral compass.

The quest for "correctness" in IDSS goes beyond mere numbers and algorithms. It beckons us to consider the deeper values and judgments that define our humanity. These systems, no matter how advanced, must echo the dreams and hopes of those they serve.

Reinforcement learning from human feedback offers a vision of machines that mirror our collective conscience. Yet, it is fraught with the shadows of human imperfection and bias, challenging us to sculpt decision models that truly serve the common good.

On the other hand, formal verification techniques—deductive verification, model checking, and abstract interpretation—stand as pillars of integrity, promising reliability and trust. Entering this uncharted realm with courage and vision, we must redefine correctness with an ethical heart and operational wisdom. This journey isn't merely technical; it's a moral imperative, a step toward crafting systems that resonate with Spinoza's timeless vision of liberation, not confinement.

Will IDSS become another cautionary tale, or is it the key to unlocking a new era of nearly perfect intelligence? The answer lies in our hands, guided by the principles of Spinoza, to choose wisely.

Introduction

As I explored this captivating new paper, its profound implications for the future of human-AI interaction became immediately apparent. Published on March 10th by researchers from Zhengzhou University and the University of Electronic Science and Technology, it presents a bold vision for "correctness learning." This framework aims to bridge the gap between the logical realm of deductive verification and the nuanced, context-dependent world of intelligent decision-making.

Central to their exploration is the question: What does "correctness" truly mean in the era of intelligent decision-support systems (IDSS)? Is it merely about achieving algorithmic accuracy, or does it demand a deeper understanding of the human values and biases that shape our judgments?

The authors tackle this question with both daring and humility, acknowledging the enduring challenge of verifying decision outputs—a puzzle that has long confounded even the brightest minds in AI. Yet, they propose a new framework that unites deductive logic with the adaptive, learning-driven realm of decision-making.

At its core is "Pattern-Driven Correctness Learning" (PDCL), a method that models and reasons about the adaptive behaviors or correctness patterns of intelligent agents. But what exactly are these patterns? How do they reflect the values and priorities that should guide us? Can we truly capture the subtle, context-rich factors that define the "correctness" of an outcome?

The framework's captivating visual, depicted in Figure 1, illustrates a two-stage process that combines the exploratory power of hierarchical reinforcement learning with the precision of deductive reasoning. This approach aims to guide decision models towards correctness patterns found in historical high-quality schemes.

As I consider this approach, I am acutely aware of the significant challenges that lie ahead. With IDSS becoming integral to society, understanding their ethical and operational principles is more urgent than ever. Missteps could lead to disaster, while success may usher in a new era of human-AI collaboration that genuinely serves the greater, common good.

What insights or breakthroughs might this "correctness learning" framework bring? How could it transform the way we design, deploy, and interact with IDSS in critical domains like smart manufacturing, medical supply, transportation, and energy management? We shall see. The stakes have never been higher.

Reinforcement Learning from Human Feedback & Safe Reinforcement Learning

As I explored the depths of this intriguing paper, I was struck by its profound implications for the future of computational design. The authors discuss "Reinforcement Learning from Human Feedback," a concept that hints at a harmonious partnership between humans and machines. But what do we truly seek in this union? Are we projecting our biases onto these intelligent agents, or are we on the verge of a new era of symbiotic decision-making that could revolutionize our approach to design challenges?

Then we venture into the realm of "Safe Reinforcement Learning," which unfolds into three distinct approaches:

Constrained Reinforcement Learning: This approach aims to ensure that the agent’s behavior satisfies certain constraints. But what are these constraints, and how do they align with the values and aspirations of the people these systems are meant to serve? Are we merely reimposing our own biases, or are we truly capturing the essence of what it means to act responsibly and ethically?

Shielded Reinforcement Learning: By combining formal verification with reinforcement learning, this method seeks to verify the safety of the agent’s behavior. Yet, as the authors note, these existing approaches focus only on process reliability, restricting the decision path to behaviors that satisfy the specified properties. How can we move beyond these constraints and truly embed the “correctness” of the decision results into the heart of the decision-making process?

Risk-Constrained Policy Gradients: These methods aim to learn policies that are robust to risks. But what constitutes “risk” in the context of intelligent decision-support systems? Are we merely optimizing for numerical metrics, like financial or operational risk, or are we considering the deeper societal implications of these systems’ actions? How can we ensure that the policies we learn truly prioritize the well-being and flourishing of the communities they serve, rather than simply minimizing abstract notions of risk?
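To make the shielding idea from the second approach above more concrete, here is a minimal sketch. Everything in it is an assumption for illustration: the battery-level state, the action names, the safety rule, and the `shield` helper are hypothetical and are not taken from the paper. It only shows the general mechanism, where a safety check sits between the policy and the environment and swaps out any action that would violate the property.

```python
from typing import List

State = int   # hypothetical: remaining battery units of a transport car
Action = str  # hypothetical action names: "deliver" or "recharge"


def is_safe(state: State, action: Action) -> bool:
    """Assumed safety property: never start a delivery with fewer than 2 units."""
    if action == "deliver":
        return state >= 2
    return True


def shield(state: State, proposed: Action, fallback: Action = "recharge") -> Action:
    """Pass the proposed action through if it is safe, otherwise use the fallback."""
    return proposed if is_safe(state, proposed) else fallback


def eager_policy(state: State) -> Action:
    """Stand-in for a learned policy that always wants to deliver."""
    return "deliver"


def step(state: State, action: Action) -> State:
    """Toy dynamics: a delivery costs 2 units, a recharge adds 3."""
    if action == "deliver":
        return state - 2
    if action == "recharge":
        return state + 3
    return state


def run(start: State, steps: int) -> List[Action]:
    """Run the shielded policy and record which actions were actually executed."""
    state, trace = start, []
    for _ in range(steps):
        action = shield(state, eager_policy(state))
        trace.append(action)
        state = step(state, action)
    return trace
```

Notice that the shield only constrains the path the agent takes. It says nothing about whether the final result is a good one, which is exactly the gap the authors point to.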

For beginners in computational design, these research areas offer an exciting glimpse into the future of human-AI collaboration. The real challenge, however, lies in transcending current limitations and forging a path that seamlessly integrates deductive verification into decision-making. Only then can we unlock these tools' true potential, harnessing them for a more harmonious, equitable, and prosperous world.

By shifting focus from the intricate task of formalizing decision-making to rigorously verifying decision outputs, we encounter a new frontier in human-AI collaboration. What might this approach mean for the world of computational design on the horizon?

Problem Formulation

In the intriguing realm of computational design, one frequent challenge is completing a set of tasks as efficiently as possible with limited resources. Section 3 of the paper introduces a model that addresses this scheduling and resource allocation problem.

The core elements of this problem are:

Operations: These are the individual actions or jobs that need to be carried out, such as moving an object or assembling a component.

Tasks: Tasks consist of a series of operations that must be performed in a specific order to achieve a larger goal, like building a product or executing a manufacturing process.

Equipment: These are the resources available to perform operations, including specialized tools and machines.

Cars: Mobile resources used to transport items or components between locations where operations are performed.

Job Scheduling: This is the strategic allocation of available equipment and cars to the operations within tasks to minimize the total time required for completion. The goal is to optimize resource use and ensure tasks are completed efficiently.

The challenge lies in assigning the right equipment and cars to complete tasks, reducing the total completion time. For those new to computational design, this section illustrates how complex real-world problems can be systematically approached and solved using structured models and decision-making strategies.
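For readers who think in code, the elements above can be sketched as a small data model. This is an illustrative simplification and not the paper's formal model: the class names, the `makespan` function, the resource names, and the durations are all assumptions, and tasks are simply dispatched in list order.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Operation:
    name: str
    duration: int  # time units; illustrative values only


@dataclass
class Task:
    name: str
    operations: List[Operation]  # must run in this order


def makespan(tasks: List[Task], assignment: Dict[str, str]) -> int:
    """Total completion time when each operation runs on its assigned resource.

    `assignment` maps operation name -> resource name (equipment or car).
    Tasks are dispatched in list order; an operation starts once both its
    predecessor in the same task and its resource are free.
    """
    resource_free: Dict[str, int] = {}
    finish = 0
    for task in tasks:
        t = 0  # time at which the previous operation of this task finished
        for op in task.operations:
            res = assignment[op.name]
            start = max(t, resource_free.get(res, 0))
            t = start + op.duration
            resource_free[res] = t
        finish = max(finish, t)
    return finish


# Hypothetical instance: two tasks, two machines, one car.
tasks = [
    Task("A", [Operation("a1", 3), Operation("a2", 2)]),
    Task("B", [Operation("b1", 2), Operation("b2", 4)]),
]
contended = {"a1": "machine1", "a2": "car1", "b1": "machine1", "b2": "machine2"}
balanced = {"a1": "machine1", "a2": "car1", "b1": "machine2", "b2": "machine2"}

print(makespan(tasks, contended))  # 9: b1 waits for machine1
print(makespan(tasks, balanced))   # 6: b1 starts immediately on machine2
```

The only difference between the two assignments is where `b1` runs, yet the total completion time drops from 9 to 6. That sensitivity is what makes job scheduling a decision problem worth learning.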

To better understand this process, let's think of this job scheduling problem as a fun game, and then I'll draw a parallel to how the Intelligent Decision-Support System (IDSS) operates in a quantum computer equipment and supply company.

Imagine you're the manager of a dynamic quantum computing facility, where you have various tasks that need to be accomplished. Each task includes a series of smaller steps, such as assembling quantum processors, calibrating systems, testing performance, and packaging units for delivery.

To complete these tasks, you have a specialized team (the equipment) – a technician for assembly, a calibration expert, a quality assurance specialist, and a logistics coordinator. You also have a fleet of delivery vehicles (the cars) that can move the quantum hardware and supplies to research institutions and tech companies.

Your goal in this game is to determine the most effective way to assign tasks to your team and schedule the delivery vehicles, ensuring that all projects are completed as quickly as possible. You need to consider factors like:

Which team member is best suited for each step of the task?

How long will each step take?

When do the delivery vehicles need to dispatch the equipment?

How can you minimize the time your team spends waiting for components or information?

As the manager, you are responsible for making all the decisions regarding task scheduling and resource utilization. It’s up to you to devise the fastest, most efficient plan to complete all projects.
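If you want to play the manager's game on a computer, a brute-force sketch looks like this. The project names and hour values are made up, and the assumption that each stage has exactly one specialist is mine, which turns the game into what is usually called a flow shop. Trying every order only works for a handful of projects, which is part of why learning-based approaches are attractive for larger instances.

```python
from itertools import permutations
from typing import Dict, List, Sequence, Tuple

STAGES = ["assemble", "calibrate", "test", "package"]  # one specialist per stage

# Hypothetical step durations (hours) per project, listed in STAGES order.
PROJECTS: Dict[str, List[int]] = {
    "P1": [3, 2, 2, 1],
    "P2": [1, 4, 1, 1],
    "P3": [2, 1, 3, 1],
}


def completion_time(order: Sequence[str]) -> int:
    """Time at which the last project is packaged, for a given project order.

    Each project visits the stages in sequence, and each specialist
    works on one project at a time.
    """
    stage_free = [0] * len(STAGES)  # when each specialist is next available
    for project in order:
        t = 0  # when this project finished its previous stage
        for i, duration in enumerate(PROJECTS[project]):
            start = max(t, stage_free[i])
            t = start + duration
            stage_free[i] = t
    return stage_free[-1]


def best_order() -> Tuple[Tuple[str, ...], int]:
    """Try every project order and keep the first one with the smallest completion time."""
    candidates = ((order, completion_time(order)) for order in permutations(PROJECTS))
    return min(candidates, key=lambda pair: pair[1])
```

With these made-up numbers, running the projects in the order P1, P2, P3 takes 14 hours, while the best orders finish in 12. The calibration expert is the bottleneck, so the order in which projects reach that stage matters most.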

Now, let’s relate this game to the IDSS system discussed in the report.

The IDSS functions like the "manager" of a complex quantum computing supply chain. Just as you, the facility manager, must make decisions about the optimal use of available resources (equipment and vehicles) to complete a series of tasks (operations), the IDSS aims to enhance efficiency in similar processes.

The IDSS employs advanced algorithms and machine learning models to analyze available data, much like how you must consider the capabilities of your team and the timing of delivery vehicles. The goal is to identify the best scheduling and resource allocation strategies to reduce the total time and cost of fulfilling all projects.

However, unlike a human manager who might rely on intuition and experience, the IDSS requires a high level of accuracy and reliability in its decision-making. This is where the deductive verification approach proposed in the report comes into play – it serves as a method to formally verify the correctness of the IDSS’s decision-making process, akin to how you would meticulously plan and review your decisions to ensure smooth operations in the facility.

Thus, the job scheduling game you’re playing is a simplified reflection of the real-world challenges an IDSS faces in safety-critical environments, like quantum computing supply chains. By grasping this game, you can begin to appreciate the significance of the work outlined in the report, which aims to improve the reliability and trustworthiness of IDSS decisions!

Algorithm: The IDSS Design

In Section 4 of the report, the researchers develop an IDSS that makes decisions for the job scheduling problem using a hierarchical reinforcement learning (HRL) approach. The system is designed so that every decision is both efficient and safe.

The HRL model consists of two main components:

1. The Lower-layer Model: Responsible for allocating available resources, such as cars and equipment, to complete individual tasks. It learns through trial and error to efficiently assign the right resources to the right tasks and is rewarded for making good decisions.

2. The Upper-layer Model: Focuses on deciding the overall order and prioritization of tasks. It takes a high-level view to determine the best sequence of tasks for job completion and is rewarded for making decisions that enhance speed and efficiency.
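The two-layer split can be illustrated with a deliberately tiny tabular sketch. To be clear about what is assumed: this is not the paper's HRL model. The task and machine names, the durations, the reward (negative sum of task completion times), and the simple epsilon-greedy value tables are all my own stand-ins, chosen only to show an upper layer deciding what to do next and a lower layer deciding which resource to use.

```python
import random
from collections import defaultdict

# Hypothetical toy instance: processing time of each task on each machine.
DURATIONS = {
    "t1": {"m1": 2, "m2": 5},
    "t2": {"m1": 4, "m2": 1},
    "t3": {"m1": 3, "m2": 3},
}
MACHINES = ["m1", "m2"]


def epsilon_greedy(q, state, actions, eps, rng):
    """Pick a random action with probability eps, else the best-known one."""
    if rng.random() < eps:
        return rng.choice(actions)
    return max(actions, key=lambda a: q[(state, a)])


def train(episodes=2000, eps=0.2, lr=0.1, seed=0):
    rng = random.Random(seed)
    q_upper = defaultdict(float)  # (remaining tasks, chosen task) -> value
    q_lower = defaultdict(float)  # (task, chosen machine) -> value
    for _ in range(episodes):
        remaining = sorted(DURATIONS)
        free_at = {m: 0 for m in MACHINES}
        picks, total = [], 0
        while remaining:
            state = tuple(remaining)
            # Upper layer: which task to schedule next.
            task = epsilon_greedy(q_upper, state, remaining, eps, rng)
            # Lower layer: which machine to give that task.
            machine = epsilon_greedy(q_lower, task, MACHINES, eps, rng)
            free_at[machine] += DURATIONS[task][machine]
            total += free_at[machine]  # completion time of this task
            picks.append((state, task, machine))
            remaining.remove(task)
        reward = -total  # finishing tasks sooner gives a higher reward
        # Move every decision made in this episode toward the episode's reward.
        for state, task, machine in picks:
            q_upper[(state, task)] += lr * (reward - q_upper[(state, task)])
            q_lower[(task, machine)] += lr * (reward - q_lower[(task, machine)])
    return q_upper, q_lower
```

After training, `q_lower[("t2", "m2")]` can be compared with `q_lower[("t2", "m1")]` to see which machine the lower layer has come to prefer for `t2`. Both layers share one reward here for brevity; a fuller design would likely give each layer its own signal, as the description above suggests.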

In addition to the HRL model, the researchers incorporate deductive verification techniques. This involves formally modeling and analyzing the system’s behavior using mathematical reasoning. They utilize a modeling language called MLJSS to describe resource allocation and management operations within the job scheduling system. This enables a symbolic analysis of patterns and priorities in the decision-making process.

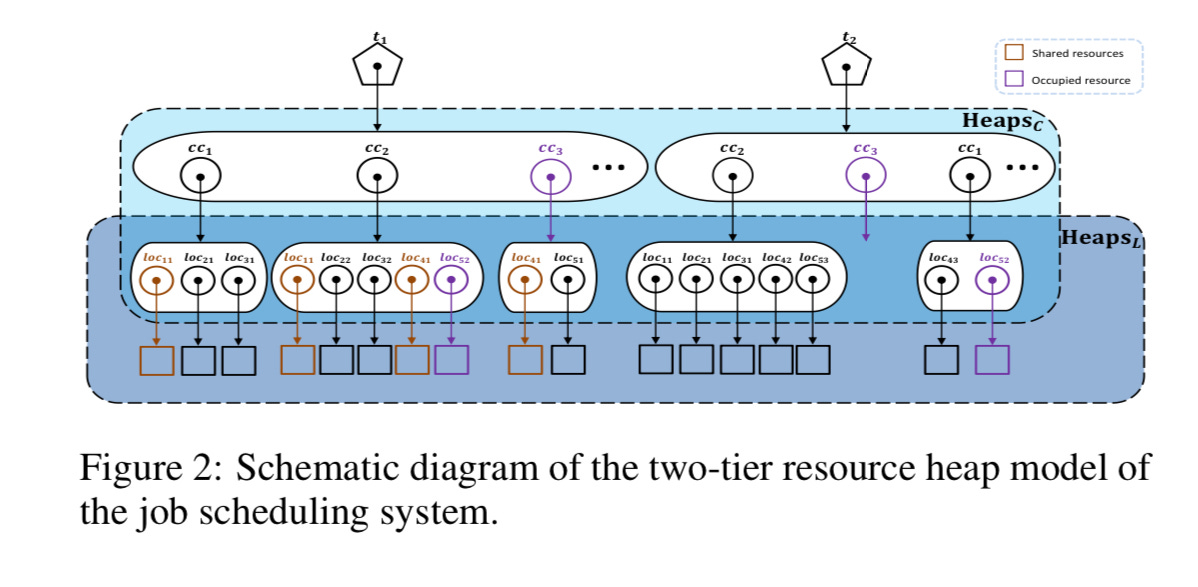

Figure 2 illustrates the two-tier resource heap model used in the job scheduling system. This schematic diagram shows how resources are allocated and managed, depicting the relationship between shared and occupied resources. The model effectively captures how tasks, cars, and equipment are dynamically coordinated to ensure optimal resource usage.

Figure 3 presents a simplified modeling program of the historical high-quality schemes. It demonstrates how tasks, cars, and locations are allocated and managed over time. The program visually represents the sequence of operations, showing how resources are planned, assigned, and freed as tasks progress. This helps in understanding the dynamic implementation process of the scheduling system and the underlying logic that drives decision-making.
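The assign-and-free lifecycle described for these figures can be mimicked with a toy class. This is not MLJSS, whose syntax is not reproduced in this article. The `ResourceHeap` name, its methods, and the invariant are assumptions meant only to convey the idea of resources moving between a shared pool and an occupied set.

```python
from typing import Dict, Set


class ResourceHeap:
    """Toy two-tier model: a resource is either shared (free) or occupied by a task."""

    def __init__(self, resources: Set[str]) -> None:
        self.shared: Set[str] = set(resources)
        self.occupied: Dict[str, str] = {}  # resource -> task holding it

    def assign(self, resource: str, task: str) -> None:
        """Move a resource from the shared pool to a task."""
        if resource not in self.shared:
            raise ValueError(f"{resource} is not available")
        self.shared.remove(resource)
        self.occupied[resource] = task

    def free(self, resource: str, task: str) -> None:
        """Return a resource to the shared pool; only its holder may do so."""
        if self.occupied.get(resource) != task:
            raise ValueError(f"{task} does not hold {resource}")
        del self.occupied[resource]
        self.shared.add(resource)

    def invariant(self) -> bool:
        """No resource is both shared and occupied at the same time."""
        return self.shared.isdisjoint(self.occupied)
```

A schedule that tries to hand the same car to two tasks at once fails at `assign`, which is the kind of property a deductive verifier would aim to prove for every possible run rather than check at runtime.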

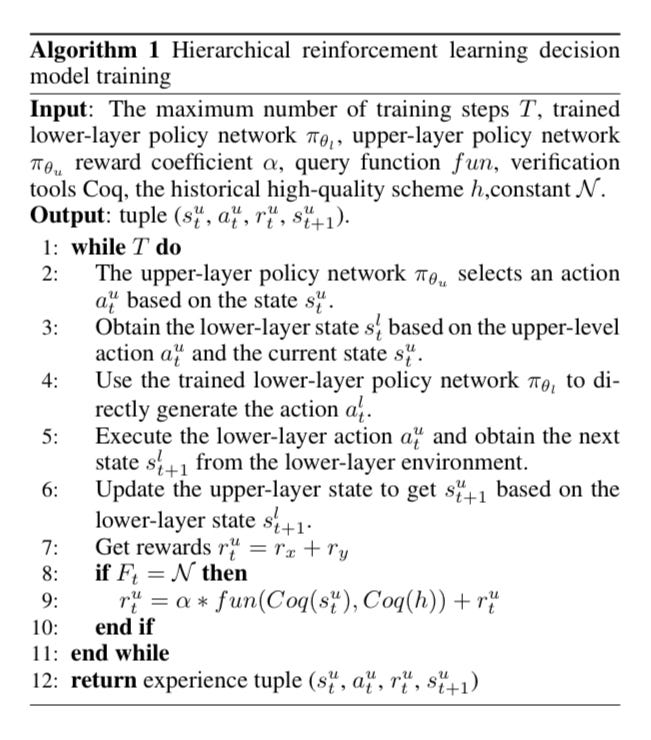

Algorithm 1 outlines the step-by-step process that the IDSS follows to generate and verify job schedules. This algorithm describes how the system navigates through different scheduling possibilities, applies the HRL model, and incorporates verification checks to ensure the safety and efficiency of the generated schedules.

The IDSS further embeds a formal verification framework that rigorously validates the safety and correctness of job scheduling decisions. This framework includes:

Formal System Model: Constructing a detailed mathematical model of the entire job scheduling system, including tasks, equipment, vehicles, and operational parameters, serving as the verification foundation.

Model Checking: Exhaustively exploring the state space of the system model to ensure that all possible schedules satisfy safety and liveness properties, preventing unsafe outcomes.

Abstract Interpretation: A static analysis technique to mathematically prove the absence of runtime errors or constraint violations across potential schedules.

Compositional Verification: Breaking down complex systems into smaller components for independent verification, then composing the results to ensure overall system safety.
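Of the techniques listed above, model checking is the easiest to show in a few lines. The sketch below is a generic explicit-state safety check using breadth-first search, not the paper's verifier. The two-tasks-one-car model and every function name are hypothetical.

```python
from collections import deque


def check_safety(initial, successors, is_safe):
    """Explore every reachable state; return a path to an unsafe state, or None."""
    parent = {initial: None}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        if not is_safe(state):
            path = []
            while state is not None:  # walk back to the initial state
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None


# Toy model: state = (task A holds the car, task B holds the car).
def mutual_exclusion(state):
    """Safety property: the car is never held by both tasks at once."""
    a, b = state
    return not (a and b)


def guarded(state):
    """A task may take the car only when nobody holds it."""
    a, b = state
    if not a and not b:
        yield (True, b)
        yield (a, True)
    if a:
        yield (False, b)
    if b:
        yield (a, False)


def unguarded(state):
    """Buggy variant: each task takes or releases the car without checking."""
    a, b = state
    yield (not a, b)
    yield (a, not b)


start = (False, False)
print(check_safety(start, guarded, mutual_exclusion))    # None: no unsafe state is reachable
print(check_safety(start, unguarded, mutual_exclusion))  # a path ending in (True, True)
```

Exhaustive exploration like this grows quickly with the number of tasks and resources, which is one reason compositional approaches are listed alongside it.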

The IDSS is tightly integrated with optimization algorithms, constrained to generate only schedules that have been verified as safe and correct. A feedback loop allows the system to continuously learn and adapt its strategies based on real-world performance, ensuring it remains responsive to environmental changes.

By merging advanced optimization techniques with rigorous formal verification, this IDSS signifies a major advancement in developing trustworthy, safety-critical decision-support systems. The computational nuances of its architecture and algorithms are vital to unlocking its potential for high-stakes applications!

The IDSS Design: Optimization and Verification

As computational design beginners, we can learn a great deal from the researchers' masterful IDSS design presented in this paper. The level of sophistication and attention to detail is truly captivating.

At the heart of this design lies a hierarchical reinforcement learning model - a symphony of decision-making prowess. The lower layer expertly handles resource allocation, while the upper layer orchestrates task prioritization. Each component plays its part seamlessly, driven by the ultimate goal of efficiency and safety.

But the true genius lies in the researchers' incorporation of deductive verification techniques. It's as if they've executed a grand strategic chess move, formally modeling the system's behavior and symbolically analyzing the underlying patterns and priorities. This process is akin to peering into the very soul of the decision-making process, unlocking a deeper understanding of the system's inner workings.

The two-tier resource heap model is a schematic diagram that speaks volumes. The intricate dance of shared and occupied resources, visualized with such precision, is a testament to the researchers' mastery of computational design. It's a window into the system's complex dynamics, a blueprint for aspiring IDSS designers to study and emulate.

Furthermore, the modeling program of the historical high-quality schemes is a veritable playbook of winning moves. The sequence of operations, the resource allocation and management - it's a treasure trove of insights into the logic that drives this system. A strategic resource for any computational designer seeking to elevate their IDSS capabilities.

The algorithm laid out by the researchers is a blueprint for success. The way it navigates the scheduling possibilities, applies the HRL model, and incorporates those verification checks - it's a masterclass in risk mitigation and performance optimization. A true testament to the power of blending advanced computational techniques.

The formal verification framework they've built is the ace up their sleeve. Modeling the system, checking the state space, interpreting the abstractions, composing the components - it's a multilayered defense against any potential pitfalls. A safeguard that ensures the robustness and reliability of the IDSS design.

Ultimately, this IDSS design is a shining example of what's possible when computational design meets strategic mastery. By seamlessly blending advanced optimization techniques with rigorous formal verification, the researchers have created a decision-support system that is as efficient as it is trustworthy. A true inspiration for computational design beginners, showcasing the transformative potential of this discipline.

Conclusion

In closing, could IDSS be the new secret sauce for crafting impeccable, highly ethical AI? It just might be! As we dive into the lively world of artificial intelligence, Spinoza's insight lights the way for designing systems that truly serve humanity. Imagine an elegantly crafted algorithm that guides without restraining. Our AI frameworks must rise beyond mere control, carving pathways for optimal decision-making that enhance human freedom and dignity.

Picture a world where machines running our vital systems are committed to our highest ethical values. A world where decisions by advanced smart systems go beyond efficiency, crafted to nurture everyone's well-being.

Visualize a healthcare system where treatment plans arise not just from cold algorithms but from a deep understanding of moral philosophy and a commitment to optimal care. This smart system examines every data point, medical history, and research breakthrough, ensuring paths that maximize positive outcomes for individuals and communities.

Envision a transportation network where autonomous vehicles move not just for speed but with a pledge to serve and protect the most vulnerable. This system, grounded in strong ethics, prioritizes safety for pedestrians and cyclists, earning public trust.

Imagine an energy grid where renewable resources are managed with a drive for energy justice and environmental care, free from profit motives. This smart system, armed with the greatest insight, makes decisions that protect the most vulnerable populations and secure a sustainable future.

These visions aren't dreams—they're glimpses of a computational future now within reach. A future where machines are not only efficient but fundamentally good, with ethics woven into every decision. Together, we can turn these aspirations into reality. Let's embark on this journey, crafting a world where technology uplifts our humanity and lights the way to a brighter AI-driven future!
