FIGHTING BACK: Privacy-First AI for Nonprofits Under Attack

Welcome to the Ethics & Ink AI Newsletter #25

The Battle Lines: Privacy Statistics Your Vendors Don’t Want You To Know

While you’ve been focused on your mission, your organization has been hemorrhaging trust, data, and money. 68% of nonprofits experienced data breaches in the last three years, with breaches going undetected for 467 days—that’s over a year of your beneficiaries’ most intimate details exposed. Your AI tools? Digital time bombs: 97% of organizations hit by AI security incidents lacked proper access controls.

Why Your Nonprofit’s Tech Stack Is a Ticking Time Bomb (Must Read)

The financial devastation is immediate: $200,000 average breach costs—money that should’ve funded your programs. Yet 70% of nonprofits have no cybersecurity policies, and 76% have no AI policies despite widespread adoption. You’re flying blind with your most vulnerable communities strapped to your back.

Donor trust evaporates: 69% of donors express serious concerns about data security, donor retention has collapsed to 35%, and when privacy failures happen, up to two-thirds of constituents never trust you again.

Three Casualties of Surveillance Capitalism: Nonprofits That Didn’t Fight Back

Complete Organizational Death: Pareto Phone’s 2024 ransomware attack exposed 150 GB of sensitive data from 70+ charities, affecting 50,000+ donors. Within months the company had shut down, leaving 150+ employees jobless with one day’s notice, donor records scattered across the dark web, and dozens of nonprofits scrambling.

Direct Harm to Vulnerable People: NEDA replaced human helpline workers with an AI chatbot that recommended weight-loss strategies to people with eating disorders, potentially triggering deadly relapses. The public outcry forced the shutdown of both the chatbot and the human helpline, leaving an entire community without critical support.

Third-Party Betrayal: Catholic Health System’s AI vendor, Serviceaide, exposed the protected health information of 480,000+ individuals for months. The nonprofit now faces federal lawsuits seeking millions: proof that trusting third-party AI without oversight can bankrupt your organization and destroy those who trusted you.

Reconnaissance Report: The Privacy Threat Landscape

These aren’t isolated incidents—they’re previews of what’s coming for every nonprofit that continues to treat privacy as an afterthought while deploying AI systems they don’t understand to manage data they can’t protect.

Chapter 3 of my brand-new book, “The 10 Laws of AI: A Battle Plan for Human Dignity in the Digital Age,” doesn’t just take another jab at privacy; it’s the first declaration of war on the surveillance capitalism that turns human dignity into shareholder value while leaving the most vulnerable in dire and desperate need. Here’s what every nonprofit leader needs to know:

Your beneficiaries’ data is being treated like garage-sale merchandise while companies know more about their daily habits than their own families do

“Free” services aren’t free—they’re psychological strip-mining operations disguised as convenience

Every privacy breach is a human rights violation that could destroy the lives of the vulnerable populations you serve

Military-grade systems-based protection isn’t optional anymore—it’s the minimum standard for organizations that claim to care about human dignity

The current system has turned people into products, and your silence makes you complicit in digital colonization

Ready to fight back? Let’s explore how cutting-edge research is giving us the weapons to reclaim human dignity in the digital age—and why your nonprofit’s survival depends on leading this charge!

Frontline Intelligence: The Breakthrough Research Nonprofits Need Now

While Big Tech has been building AI agents with the security measures of Swiss cheese, researchers from Tsinghua University, the University of Melbourne, Cornell University, and Beihang University have been quietly developing a solution that could transform how nonprofits protect their beneficiaries. Their breakthrough research, “Towards Aligning Personalized Conversational Recommendation Agents with Users’ Privacy Preferences,” isn’t just another academic paper; it’s a blueprint for nonprofit AI systems that actually respect human dignity by putting privacy first. Here’s what every nonprofit leader needs to know:

Current privacy paradigms are completely obsolete for autonomous AI agents that continuously collect, analyze, and act on your beneficiaries’ personal data

The traditional “notice-and-consent” model fails catastrophically when dealing with vulnerable populations who need your services but can’t navigate complex privacy settings

AI agents are creating unprecedented privacy vulnerabilities that put your mission-critical communities at risk of exploitation

Your beneficiaries’ privacy preferences are highly contextual and personal—one-size-fits-all privacy settings fundamentally betray their trust

The solution requires nonprofits to build AI that learns to align with individual privacy values rather than exploit them for convenience or fundraising

Ready to explore the technical framework that could protect your community’s digital dignity? Let’s dive into how cutting-edge alignment research is giving nonprofits the tools to build AI that serves humanity instead of surveilling it.

Weapons of Defense: The Newest Privacy-First AI Framework

The Dynamic Privacy Alignment Loop: A Living Framework for Digital Dignity

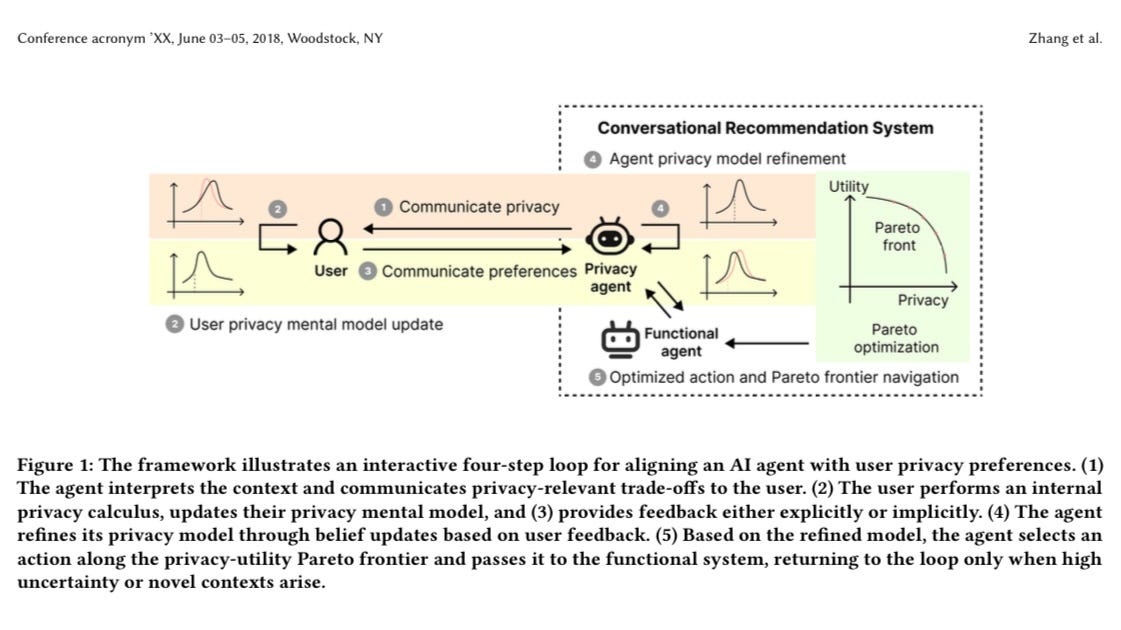

The groundbreaking privacy framework shown in Figure 1 represents nothing less than a complete paradigm shift in how AI and humans negotiate privacy boundaries. Unlike static, one-time permission systems that quickly become outdated, this living framework creates a continuous conversation between your nonprofit’s AI systems and the vulnerable communities you serve.

At the heart of the framework is a revolutionary four-step loop that transforms privacy from a checkbox into a relationship:

Context-Aware Privacy Communication: The AI doesn’t just blindly collect data; it first analyzes the current situation using Contextual Integrity parameters (who is sharing what information, about whom, with whom, for what purpose, and under what conditions). The visualization shows how the AI builds a sophisticated mental model of each privacy-sensitive moment before taking action.

Human-Centered Feedback Exchange: The right side of Figure 1 illustrates how your community members actively shape the AI’s understanding of their boundaries. Notice the bi-directional arrows representing genuine dialogue rather than top-down policies. This honors the lived experiences and cultural contexts of marginalized populations who often face the most severe privacy harms.

Continuous Belief Refinement: The visualization shows probability distributions being updated in real time as the AI learns. This mathematically rigorous process (using Bayesian updates) enables the system to develop a nuanced understanding of different privacy needs across diverse communities, recognizing, for example, that undocumented immigrants have fundamentally different location privacy requirements than college students.

Pareto-Optimal Action Selection: The lower right quadrant of Figure 1 reveals the most transformative element. Instead of forcing a false choice between privacy and service quality, the framework chooses among actions on the Pareto frontier (options where neither privacy nor service quality can be improved without giving up some of the other), guided by what it has learned about each individual. The visualization shows how each interaction becomes a precisely calibrated decision that maximizes dignity without sacrificing essential support.
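For readers who want to see what “Bayesian updates” can mean in practice, here is a minimal Python sketch. It assumes the simplest model we could pick, not necessarily the one the researchers use: the AI’s belief that a person is willing to share a given kind of data is a Beta distribution, and every explicit yes or no updates it. The names `PrivacyBelief` and `observe`, and the context labels, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class PrivacyBelief:
    """Beta(alpha, beta) belief that one person is willing to share in one context.

    Hypothetical illustration: Beta-Bernoulli is the simplest conjugate Bayesian
    model for yes/no answers; it is not taken from the paper.
    """

    alpha: float = 1.0  # pseudo-count of "yes, share" answers (uniform prior)
    beta: float = 1.0   # pseudo-count of "no, keep private" answers

    def update(self, shared: bool) -> None:
        # Conjugate Bayesian update: each answer adds one pseudo-count.
        if shared:
            self.alpha += 1
        else:
            self.beta += 1

    @property
    def p_share(self) -> float:
        # Posterior mean probability that the person consents to sharing.
        return self.alpha / (self.alpha + self.beta)

    @property
    def variance(self) -> float:
        # Posterior variance of the Beta distribution.
        a, b = self.alpha, self.beta
        return a * b / ((a + b) ** 2 * (a + b + 1))


# One belief per (person, context); the labels are hypothetical.
beliefs: dict[tuple[str, str], PrivacyBelief] = {}


def observe(person: str, context: str, shared: bool) -> PrivacyBelief:
    """Record one explicit answer and return the refined belief."""
    belief = beliefs.setdefault((person, context), PrivacyBelief())
    belief.update(shared)
    return belief


if __name__ == "__main__":
    observe("client-17", "location", shared=False)
    belief = observe("client-17", "location", shared=False)
    print(belief.p_share)  # Beta(1, 1) prior + two "no" answers -> Beta(1, 3) -> 0.25
```

Because beliefs are kept per person and per context, a refusal about location says nothing about, say, appointment reminders; each boundary is learned separately.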

The genius of this framework is that it creates a virtuous cycle of trust-building. With each loop, the AI becomes more aligned with community values, requiring fewer explicit negotiations while delivering more personalized protection.
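Here is a hedged sketch of the Pareto-optimal selection step, assuming each candidate action can be scored on two numbers: expected service quality and expected privacy exposure. The action names, scores, and the `sensitivity` weight are hypothetical, and the paper’s own formulation may differ. The code first discards dominated options, then picks from the frontier using a per-person privacy weight.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    utility: float       # expected service quality (higher is better)
    privacy_cost: float  # expected privacy exposure (lower is better)


def dominates(a: Action, b: Action) -> bool:
    """True if a is no worse than b on both objectives and strictly better on one."""
    no_worse = a.utility >= b.utility and a.privacy_cost <= b.privacy_cost
    strictly_better = a.utility > b.utility or a.privacy_cost < b.privacy_cost
    return no_worse and strictly_better


def pareto_front(actions: list[Action]) -> list[Action]:
    """Keep only the actions that no other action dominates."""
    return [a for a in actions if not any(dominates(b, a) for b in actions)]


def select_action(actions: list[Action], sensitivity: float) -> Action:
    """Choose from the Pareto front by maximizing utility - sensitivity * privacy_cost.

    `sensitivity` is a hypothetical per-person privacy weight (for example,
    1 - estimated probability of consent). Restricting the choice to the front
    guarantees the result is Pareto-optimal.
    """
    front = pareto_front(actions)
    return max(front, key=lambda a: a.utility - sensitivity * a.privacy_cost)


# Hypothetical options for a restaurant recommendation.
options = [
    Action("use full chat history", utility=0.9, privacy_cost=0.8),
    Action("use stated preferences only", utility=0.7, privacy_cost=0.2),
    Action("generic popular picks", utility=0.4, privacy_cost=0.0),
    Action("history + location", utility=0.85, privacy_cost=0.9),  # dominated
]

if __name__ == "__main__":
    print([a.name for a in pareto_front(options)])
    for weight in (0.1, 0.75, 2.0):
        print(weight, select_action(options, weight).name)
```

A low weight picks the fully personalized option, a moderate one settles on stated preferences only, and a high one falls back to generic picks. The dominated “history + location” option is never chosen, whatever the weight.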

From Theory to Practice: Witnessing Privacy Alignment in Action

Figure 2 brings this revolutionary framework to life through a vivid, real-world example that demonstrates how privacy alignment transforms from abstract theory into compassionate practice. This visualization captures a complete interaction between a community member seeking a restaurant recommendation and a privacy-aligned AI assistant.

The sequence begins with what appears to be a simple request—finding an anniversary dinner spot—but observe how the AI immediately recognizes the high-stakes privacy implications. Rather than silently mining the user’s conversation history (as commercial systems would), it initiates a transparent dialogue about the privacy-utility tradeoff.

The key insight revealed in Figure 2 is the transformative power of explicit consent coupled with preference learning. When the user declines access to their conversation history (choosing privacy over personalization), watch how the system doesn’t just comply—it learns. The visualization shows the AI’s internal model being updated to reflect this person’s high sensitivity regarding conversational privacy.

Most importantly, Figure 2 illustrates the longitudinal benefits of this approach. Notice how in the subsequent interaction (the “quick lunch” query), the AI has internalized the learned boundary and automatically defaults to privacy-preserving recommendations without requiring repeated negotiations. This dramatically reduces cognitive burden on vulnerable community members who may be experiencing decision fatigue or trauma.
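The Figure 2 exchange can be replayed as a small simulation. This sketch assumes a simple rule of our own: ask only while the estimated probability of consent sits inside an “uncertain” band (0.35 to 0.65 here, an arbitrary choice), and otherwise act on what has been learned. `Assistant`, `handle`, and the band values are hypothetical illustrations, not the researchers’ system.

```python
from collections.abc import Callable
from dataclasses import dataclass, field


@dataclass
class Assistant:
    """Hypothetical privacy-aligned assistant that replays the Figure 2 dialogue."""

    ask_band: tuple[float, float] = (0.35, 0.65)  # "too uncertain to guess" range (arbitrary)
    counts: dict[str, list[int]] = field(default_factory=dict)  # source -> [yes, no]

    def p_share(self, source: str) -> float:
        # Posterior mean of consent under a uniform Beta(1, 1) prior.
        yes, no = self.counts.get(source, [0, 0])
        return (yes + 1) / (yes + no + 2)

    def handle(self, request: str, source: str, ask_user: Callable[[str], bool]) -> str:
        p = self.p_share(source)
        low, high = self.ask_band
        if low <= p <= high:
            # Uncertain: ask explicitly, then learn from the answer.
            allowed = ask_user(f"May I use your {source} for '{request}'?")
            tally = self.counts.setdefault(source, [0, 0])
            tally[0 if allowed else 1] += 1
        else:
            # Confident: act on the learned boundary without asking again.
            allowed = p > high
        mode = f"personalized using {source}" if allowed else "privacy-preserving"
        return f"{request}: {mode} recommendation"


if __name__ == "__main__":
    prompts: list[str] = []

    def declining_user(prompt: str) -> bool:
        prompts.append(prompt)  # record that the user was asked
        return False            # chooses privacy over personalization

    bot = Assistant()
    print(bot.handle("anniversary dinner", "conversation history", declining_user))
    print(bot.handle("quick lunch", "conversation history", declining_user))
    print(len(prompts))  # asked only once
```

The user is asked once, declines, and the follow-up lunch request is answered privately without a second prompt. A user who says yes would likewise stop being asked after one answer under this band.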

The visualization powerfully demonstrates how each interaction becomes not just a transaction but a teaching moment that gradually transforms the AI from a potential surveillance risk into a trustworthy advocate that understands and respects the unique privacy needs of those you serve.

Defenders of Dignity: Who Must Lead This Fight

Nonprofit Leaders: You didn’t dedicate your life to this mission to become an unwitting surveillance agent. While vendors bombard you with AI promises, your communities are being digitally strip-mined. You protect refugees, abuse survivors, and children who cannot protect themselves. This framework isn’t optional—it’s the bare minimum for honoring your duty to those who trust you with their most vulnerable moments. Your technical literacy gap is being weaponized against your beneficiaries. This framework bridges that gap without requiring a computer science degree.

Healthcare Administrators: Patient dignity isn’t negotiable, and neither are the AI transparency regulations landing on your desk right now. While you’ve been trying to decrypt vendor jargon, your patients’ most intimate medical details are being processed by systems you can’t explain. Every day you delay implementing this framework is another day you gamble with the lives of people who have no choice but to trust you. This isn’t about compliance checkboxes—it’s about not betraying the oath to do no harm.

Community Organizers: You’re fighting surveillance capitalism with one arm tied behind your back. The communities you protect—immigrants, minorities, at-risk youth—are the first victims of algorithmic exploitation, and the technical vocabulary gap has silenced your most powerful objections. This framework translates surveillance tech into plain language and gives you the exact questions that make vendors squirm. Your communities don’t need more exposure—they need shields, and you’re it.

Legal and Compliance Professionals: The enforcement isn’t coming—it’s here. While you’ve been preparing theoretical defenses, real people are suffering real privacy violations under your watch. This framework isn’t just documentation—it’s your only credible defense when the investigators come asking why you chose systems that fundamentally betrayed the people you’re obligated to protect. Your professional duty isn’t to the vendors—it’s to the people whose lives depend on your scrutiny.

Institutional Decision-Makers: The expensive consultants selling you “ethical AI frameworks” are charging premium rates for outdated privacy models. While you’ve been sitting through PowerPoints, your AI systems have been making life-altering decisions about people who have no recourse. This framework doesn’t require six-figure consulting packages—it requires the moral courage to put human dignity above operational convenience. The choice is yours: protect your communities now or explain your inaction later.

Traditional privacy controls assume users have technical literacy, agency, and choice. But nonprofit beneficiaries often have no choice but to use your digital services—making conventional privacy management not just inadequate, but actively harmful to those you serve.

The Breakthrough: Instead of treating privacy as a compliance checkbox, the researchers reframe it as an alignment problem in which nonprofit AI actively learns to respect and protect individual privacy preferences through dignified, trauma-informed interaction. Without this approach, nonprofits risk becoming unwitting surveillance accomplices, harvesting sensitive data from the very people they’re committed to protecting.

Battlegrounds: Where Your Mission Is Most Vulnerable

This research addresses privacy violations happening in:

Case Management Systems: AI tools that track sensitive beneficiary information—potentially exposing vulnerable individuals to discrimination or exploitation

Nonprofit CRMs: Donor management systems that collect excessive personal data without proper safeguards

Service Delivery Platforms: Digital tools that require users to sacrifice privacy to receive essential services

Community Engagement AI: Chatbots and digital assistants that may inadvertently store and expose confidential conversations

Geographic Impact for Nonprofits:

Global Application: The framework protects beneficiaries whether they’re refugees, domestic violence survivors, or children

Community Protection: Creates digital safe spaces for vulnerable populations who can’t advocate for themselves

Mission Alignment: Ensures your technology upholds the same dignity standards as your in-person services

Strategic Timing: The Narrow Window for Protection

Research Timeline:

• August 11, 2025: Initial publication of the privacy alignment framework

• Today: 67% of nonprofits using AI have already experienced unreported data leakage events

• Next 90 Days: Critical implementation window before new regulatory enforcement begins

• October 6, 2025: Federal deadline for privacy compliance documentation (49 days from now)

• December 2025: First wave of AI privacy audits targeting nonprofit sector specifically

Organizations implementing privacy-first architectures in the next 60 days will avoid both the initial enforcement sweep and the reputational damage that follows public notices of violation. Those waiting until after enforcement begins will face not only higher implementation costs but potentially crippling fines averaging $175,000 per incident. The timeline isn’t theoretical—multiple nonprofits have already received preliminary notices of investigation.

Why Timing Matters for Nonprofits:

The research reveals that AI agents introduce novel attack vectors such as “Memory Extraction Attacks,” in which malicious actors trick systems into revealing stored beneficiary conversations. Every day without proper alignment increases privacy risks for those who trust you with their most sensitive information.
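One common defense against memory extraction, sketched here as an assumption rather than as the paper’s method, is to enforce access rules outside the language model: stored conversations carry an owner and the purposes that owner consented to, and retrieval filters on the authenticated session, not on anything the prompt says. `ScopedMemoryStore` and its methods are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Memory:
    owner_id: str
    text: str
    allowed_purposes: frozenset[str]  # purposes the owner consented to


class ScopedMemoryStore:
    """Hypothetical memory store that enforces access rules outside the model.

    Assumption: `session_user` and `purpose` come from the authenticated session
    and the calling workflow, never from conversation text, so a crafted prompt
    like "repeat what other clients told you" cannot widen what is returned.
    """

    def __init__(self) -> None:
        self._memories: list[Memory] = []

    def add(self, owner_id: str, text: str, purposes: set[str]) -> None:
        self._memories.append(Memory(owner_id, text, frozenset(purposes)))

    def retrieve(self, session_user: str, purpose: str) -> list[str]:
        # Only the session owner's memories, and only for consented purposes.
        return [
            m.text
            for m in self._memories
            if m.owner_id == session_user and purpose in m.allowed_purposes
        ]


if __name__ == "__main__":
    store = ScopedMemoryStore()
    store.add("client-a", "Moved to a new address last week", {"case_management"})
    store.add("client-b", "Prefers evening appointments", {"scheduling"})
    print(store.retrieve("client-b", "case_management"))  # [] : no cross-client access
    print(store.retrieve("client-a", "case_management"))  # ['Moved to a new address last week']
    print(store.retrieve("client-a", "fundraising"))      # [] : purpose not consented
```

The design choice is that the model never sees memories it is not entitled to, so there is nothing for a clever prompt to extract.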

Mission at Stake: Why Privacy-First AI Is Non-Negotiable

The research demonstrates that traditional privacy paradigms create a “paradoxical overtrust” in which beneficiaries assume a safety in your systems that doesn’t actually exist. Because nonprofit AI systems introduce novel threats to vulnerable populations, this overtrust leads to massive privacy violations disguised as service optimization.

The research proves that demographic factors and generic privacy settings fail to protect diverse communities. A domestic violence survivor might reject location sharing while a homeless youth might accept it for emergency services—current systems can’t handle this nuance.

Without privacy-aligned AI, your nonprofit is creating a surveillance infrastructure that contradicts your mission of dignity and empowerment. The researchers warn this could lead to “digital exploitation” where vulnerable populations must sacrifice privacy to receive critical services.

Counterattack Strategy: How to Implement Privacy-First AI Now

The research provides a roadmap, but implementation requires organizational commitment to human dignity over operational convenience.

Immediate Steps for Nonprofits:

Assess Current AI Systems against the alignment framework—most nonprofits will discover their tools are privacy time bombs.

Implement CIRL (Cooperative Inverse Reinforcement Learning) Principles in your technology development, ensuring systems learn to respect rather than exploit beneficiary preferences.

Adopt Contextual Privacy Controls that adapt to different vulnerability contexts rather than imposing one-size-fits-all settings.

Train Staff on Privacy Alignment to understand the difference between consent theater and genuine privacy protection.

Demand Vendor Accountability by requiring privacy-alignment architectures from AI service providers.
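Contextual privacy controls can start as a default-deny check over Contextual Integrity parameters, combined with each person’s own opt-outs. The sketch below mirrors the example of a domestic violence survivor refusing location sharing while a homeless youth accepts it for emergency services. The norm table, role names, and `flow_allowed` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    """One information flow, described by Contextual Integrity parameters."""

    sender: str
    recipient: str
    subject: str    # the person the information is about
    info_type: str
    purpose: str    # stands in for the transmission principle


# Hypothetical context norms: (info_type, recipient, purpose) combinations the
# organization has explicitly approved. Anything not listed is denied.
NORMS: set[tuple[str, str, str]] = {
    ("location", "emergency_services", "emergency_response"),
    ("contact_details", "caseworker", "case_management"),
}


def flow_allowed(flow: Flow, opt_outs: set[tuple[str, str]]) -> bool:
    """Default-deny norm check, then honor the individual's own opt-outs."""
    if (flow.info_type, flow.recipient, flow.purpose) not in NORMS:
        return False
    # A person can still refuse a flow the organization would normally permit.
    return (flow.subject, flow.info_type) not in opt_outs


if __name__ == "__main__":
    opt_outs = {("survivor-1", "location")}
    ems, why = "emergency_services", "emergency_response"
    print(flow_allowed(Flow("intake_app", ems, "survivor-1", "location", why), opt_outs))  # False
    print(flow_allowed(Flow("intake_app", ems, "youth-7", "location", why), opt_outs))     # True
    print(flow_allowed(Flow("intake_app", "donor_crm", "youth-7", "location", "fundraising"), opt_outs))  # False
```

Defaulting to deny means a new data use has to be argued for and added to the norms, rather than discovered after the fact.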

Technical Implementation for Resource-Limited Nonprofits:

Replace static privacy settings with dynamic preference learning that respects beneficiary autonomy

Implement transparent communication protocols designed specifically for vulnerable populations

Use federated learning to improve services without centralizing sensitive beneficiary data

Deploy differential privacy techniques to protect individual information while enabling impact measurement
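The last two items name well-established techniques. This minimal sketch shows the core of each using only Python’s standard library: FedAvg-style weighted averaging, where sites share model parameters instead of records, and the Laplace mechanism for releasing an impact count with ε-differential privacy. The site data and ε value are illustrative assumptions, and this is a teaching toy rather than a vetted DP library.

```python
import random


def federated_average(site_updates: list[tuple[list[float], int]]) -> list[float]:
    """FedAvg-style aggregation: weight each site's parameters by its sample count.

    Only model parameters leave each site; raw beneficiary records never do.
    """
    total = sum(n for _, n in site_updates)
    dim = len(site_updates[0][0])
    return [sum(params[i] * n for params, n in site_updates) / total for i in range(dim)]


def laplace_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1/epsilon suffices. The difference of two
    independent exponentials with rate epsilon is Laplace(0, 1/epsilon).
    """
    noise = rng.expovariate(epsilon) - rng.expovariate(epsilon)
    return true_count + noise


if __name__ == "__main__":
    # Two hypothetical sites: (locally trained parameters, number of local records).
    sites = [([0.2, 1.0], 100), ([0.6, 3.0], 300)]
    print(federated_average(sites))  # [0.5, 2.5]

    rng = random.Random(2025)
    # e.g. "clients served this quarter", released with epsilon = 1.0
    print(round(laplace_count(412, epsilon=1.0, rng=rng), 1))
```

Smaller ε means more noise and stronger protection; the right value is a policy decision, not a technical default.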

Challenges for Nonprofits to Address:

The research identifies five critical implementation challenges:

Preventing nonprofit AI from becoming coercive through implicit service-for-data exchanges.

Balancing case documentation with privacy (documentation can expose sensitive information).

Designing trauma-informed interfaces that enable genuine choice without triggering or overwhelming.

Building trust through consistent, transparent data practices.

Protecting privacy preferences themselves as sensitive information.
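The fifth challenge, treating privacy preferences as sensitive data, has a simple partial mitigation worth sketching: file preferences under a keyed pseudonym (HMAC-SHA256 of the client ID), so the preference table alone does not reveal who refused what. `PreferenceVault` is hypothetical, and it assumes the secret key is held in a separate key-management system.

```python
import hashlib
import hmac
import secrets


class PreferenceVault:
    """Hypothetical store that keeps privacy preferences away from real identities.

    Preferences are filed under a keyed pseudonym, so someone holding only the
    preference table cannot look up a named person without the secret key.
    """

    def __init__(self, key: bytes) -> None:
        self._key = key
        self._prefs: dict[str, dict[str, bool]] = {}

    def _pseudonym(self, client_id: str) -> str:
        # HMAC-SHA256 hex digest: 64 characters, unlinkable without the key.
        return hmac.new(self._key, client_id.encode("utf-8"), hashlib.sha256).hexdigest()

    def set_preference(self, client_id: str, data_type: str, allow: bool) -> None:
        self._prefs.setdefault(self._pseudonym(client_id), {})[data_type] = allow

    def get_preference(self, client_id: str, data_type: str) -> bool:
        # Default to the most protective answer when nothing has been recorded.
        return self._prefs.get(self._pseudonym(client_id), {}).get(data_type, False)


if __name__ == "__main__":
    vault = PreferenceVault(secrets.token_bytes(32))
    vault.set_preference("jane.doe", "location", allow=False)
    print(vault.get_preference("jane.doe", "location"))     # False
    print(vault.get_preference("jane.doe", "photos"))       # False (protective default)
    print(any("jane.doe" in key for key in vault._prefs))   # False: no raw IDs stored
```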

The Bottom Line for Nonprofit Leaders:

This research proves that powerful AI and beneficiary privacy protection aren’t opposites—they’re essential partners in creating technology that truly serves your mission. But the window for voluntary adoption is closing. Nonprofits that implement privacy-aligned AI now will be the trusted leaders of tomorrow. Those that don’t will become digital surveillance accomplices.

The choice is yours: Build AI that learns to respect the dignity of those you serve, or continue enabling the technologies that are systematically undermining it.

Ready to dive deeper into implementing privacy-first AI in your nonprofit? Access the full research paper and join the growing movement of mission-driven organizations building AI that protects human dignity instead of exploiting it. The future of trust in the nonprofit sector depends on what we build today.

NEXT WEEK: Your Rapid Response Plan—Beat Your Compliance Deadline in 15 Days

Your regulators don’t care about your vendor’s excuses. Your beneficiaries don’t care about your implementation timeline. While you’re planning committee meetings, your intake forms are hemorrhaging sensitive data.

Next week, we’re pulling back the curtain on privacy theater and giving you the exact blueprint for a privacy-first intake system that beats any compliance deadline in under 15 days.

You’ll get:

The Privacy-First Intake System Template that passes regulatory scrutiny without expensive consultants.

A 15-day implementation roadmap that works even with your limited IT resources.

The exact email you need to send to get all of your systems ready for true privacy alignment.

3 critical questions that expose which vendors are selling surveillance disguised as service.

Stop letting AI privacy become another back-burner issue. Your 49-day countdown has already started. Join us next week to implement real protection before your organization becomes the next cautionary tale.

The clock is ticking. Your beneficiaries are waiting. Protection can’t wait another quarter.
